Today, the use of AI is commonplace. In the telecom industry, it is woven into daily operations and customer interactions. Whether customers are getting help from a chatbot, receiving a proactive network alert, or being offered a tailored plan upgrade, there is a good chance that AI is behind the interaction.

Yet research consistently shows that AI adoption falters without transparency.

A 2025 Salesforce study found that 73 percent of customers want to know when they are interacting with AI, and only 42 percent trust companies to use AI ethically, a decline from the previous year.

These numbers underscore a fundamental truth: the success of AI in telecom depends on trust.

Customers are reluctant to accept decisions they cannot understand, especially in sensitive areas like billing adjustments, fraud detection, or service disruptions. Designing transparent AI interfaces is a strategic necessity.

Understanding the Trust Deficit in AI-Driven Experiences

The trust deficit in AI-driven telecom experiences is often the result of poorly surfaced rationale.

Telecom customers are increasingly asked to trust AI systems in high-impact scenarios: billing adjustments, service recommendations, fraud detection, and even proactive plan changes.

Yet hesitation is high. According to a 2025 report from the global consumer research platform Attest, only 31 percent of consumers believe AI can improve customer experience. This reflects a fundamental truth in user experience: when AI decisions feel like a black box, adoption stalls.

Opaque decision-making doesn’t just hurt the interface experience. It risks regulatory backlash and customer churn. The long-term reputational stakes for telecoms are significant.

This gives way to a pressing concern: Can we trust what we don’t understand?

AI is adept at recognising patterns but often struggles with complex problems and nuance. These are the moments when customer trust is most fragile.

AI also comes with inherent risks. The massive volumes of personal data it processes can be mishandled or breached. There are privacy concerns when sensitive information is used without clear explanation, and the possibility of bias when models make decisions influenced by factors like postal codes or names. False fraud alarms can further erode trust, particularly when they interrupt service for legitimate customers.

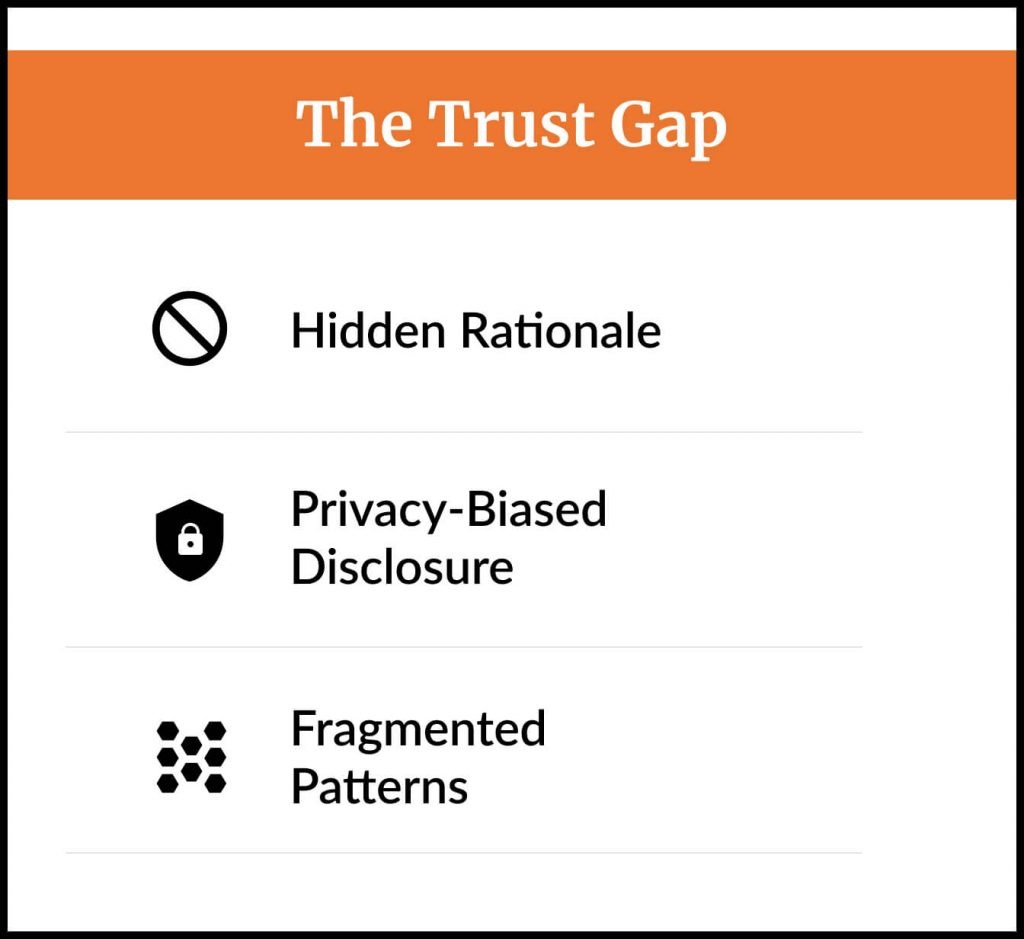

To design transparency into these interactions, we must address three core UX challenges.

1) Hidden Rationale

In telecom, AI-driven decisions often happen behind the scenes. A customer might see messages like “We’ve adjusted your bill”, “We recommend switching to Plan Plus”, or “Your SIM has been temporarily paused for security”. Without clear context, these statements look suspicious.

From a UX standpoint, the missing element is attribution of decision logic: explaining which data points, behaviours, or patterns triggered the outcome, in a form the customer can digest quickly.

Customers disengage when AI-driven outcomes appear without context. If a bill is adjusted or a service is recommended, the absence of a clear “why” creates uncertainty and distrust. The challenge is surfacing decision rationale in a way that feels integrated into the experience, not bolted on.

2) Privacy-Biased Disclosure

Telecom services rely on vast volumes of personal data: network usage, device types, location history, and payment patterns. This data richness enables powerful AI recommendations, but it also carries a risk of bias and a perception of unfairness.

Bias Risk

A model might recommend offers less often to certain postal codes or flag certain names more frequently for fraud, even if unintentional.

Privacy Risk

Over-explaining AI decisions could inadvertently expose sensitive information, such as a precise location or call history.

Designers must walk a fine line: revealing enough detail to build trust without compromising privacy or security. This often requires abstraction and categorisation (e.g., “recent data usage” instead of “7.2 GB hotspot use on April 12”).
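The abstraction step described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the feature names, the `SAFE_LABELS` mapping, and the fallback wording are all hypothetical and would need to come from your own data model and content guidelines.

```python
from dataclasses import dataclass

# Hypothetical mapping from internal feature names to customer-facing categories.
# Neither the feature names nor the labels come from a real schema.
SAFE_LABELS = {
    "hotspot_gb_by_day": "recent data usage",
    "cell_tower_history": "where you typically use the network",
    "late_payment_dates": "recent payment activity",
}

# Used when a feature has no approved customer-facing label.
FALLBACK_LABEL = "your recent account activity"


@dataclass(frozen=True)
class Factor:
    feature: str    # internal feature name used by the model
    raw_value: str  # precise value; never shown to the customer


def to_customer_reasons(factors: list[Factor]) -> list[str]:
    """Return de-duplicated, category-level reasons with raw values dropped."""
    reasons: list[str] = []
    for factor in factors:
        label = SAFE_LABELS.get(factor.feature, FALLBACK_LABEL)
        if label not in reasons:
            reasons.append(label)
    return reasons
```

Because the function only ever returns labels from an approved list, a precise value such as a date or a location cannot leak into the explanation by accident.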

3) Fragmented Patterns

Even when explanations are present, they are often inconsistent across touchpoints. A “Why this?” component in the app may look different from the one in the web portal or the script an agent uses in a call. When the app, website, and agent tools each display explanations differently, customers must relearn how to interpret AI outputs every time. This inconsistency undermines trust.

For design teams, building standard patterns and components, such as tooltips, into the design system helps users recognise and interpret explanations wherever they appear. These components should have consistent placement within the flow, scalable language that works across channels and contexts, and a predictable hierarchy (headline, key reason, optional deep dive).

Designing transparent AI interfaces in telecom requires a thoughtful balance of explainability, control, consistency, and ethical considerations.

By making AI decision-making processes clear and relatable to both customers and employees, telecom providers can bridge the trust deficit and unlock the full potential of AI-driven experiences. This approach not only improves user satisfaction and operational efficiency but also ensures compliance with evolving regulations like the EU AI Act.

Ultimately, transparency is the key to transforming AI from a mysterious “black box” into a trusted and valuable asset in telecom business operations.

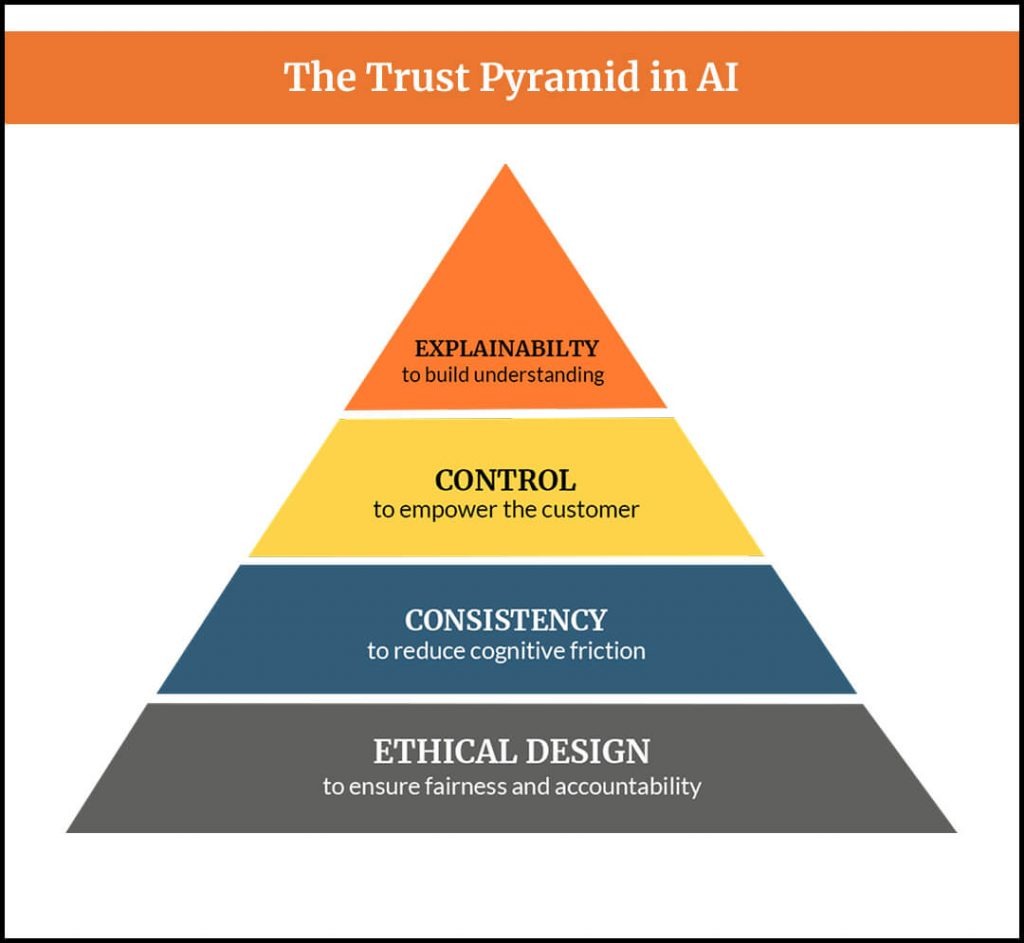

Designing AI Interfaces Customers Can Trust

Designing AI experiences that customers trust is not about adding a single transparency feature but about creating a coherent, multi-layered trust framework woven into every interaction. This framework rests on four pillars: Explainability, Control, Consistency, and Ethical Design.

1. Explainability: Making the “Why” Discoverable and Understandable

When an action is recommended, whether it’s upgrading a plan, applying a billing credit, or flagging a possible fraud incident, customers need to understand why. Without clear reasoning, the decision feels arbitrary, eroding trust and increasing the likelihood they will seek human intervention.

A well-designed explainability layer should:

- Surface the rationale in plain language, avoiding algorithmic jargon.

- Reference key, high-impact data points that influenced the decision (e.g., “You’ve exceeded your monthly data limit five times in the past 90 days” instead of “Usage anomaly detected”).

- Use progressive disclosure: begin with a short “Why this?” summary, followed by an optional “View details” link or expandable panel. This avoids overwhelming casual users while still supporting those who want depth.

- Incorporate visual aids like usage charts or simple icons to help customers connect the explanation to their own behaviour.
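As a rough sketch of progressive disclosure, the explanation can be modelled as a small record with a headline, a short reason, and optional details that are shown only on request. The `Explanation` class and `render` function below are illustrative names, and plain text stands in for whatever UI component a real design system would use.

```python
from dataclasses import dataclass, field


@dataclass
class Explanation:
    headline: str    # what happened or what is recommended
    key_reason: str  # the short "Why this?" summary
    details: list[str] = field(default_factory=list)  # optional deep dive


def render(explanation: Explanation, expanded: bool = False) -> str:
    """Render the summary by default; include details only when expanded."""
    lines = [explanation.headline, f"Why this? {explanation.key_reason}"]
    if explanation.details:
        if expanded:
            lines.extend(f"- {detail}" for detail in explanation.details)
        else:
            # Collapsed state: offer the deeper view without showing it.
            lines.append("View details")
    return "\n".join(lines)
```

Casual users see two lines and a link; users who want depth expand the same record rather than being sent to a different screen.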

2. Control: Giving Users the Ability to Shape AI Outcomes

Trust increases when customers feel they have agency over automated decisions. AI that dictates without options risks being perceived as authoritarian or manipulative.

To embed control into the design:

- Offer opt-in and opt-out settings for AI-powered personalisation, ideally at the point of decision rather than buried in account settings.

- Allow overrides for high-impact or sensitive actions — such as reversing an AI-triggered account suspension or declining a suggested plan change.

- Provide alternative paths alongside AI-driven recommendations. For example, if AI suggests Plan X, also show Plan Y (rule-based) with clear trade-offs.

- Make escalation to a human agent visible and frictionless for cases where the user lacks confidence in the AI outcome.

3. Consistency: Creating Familiar Patterns Across All Touchpoints

Customers interact with telecom brands across multiple channels: mobile app, website, chatbots, retail stores, and contact centres. If the AI explains itself differently in each channel, customers have to relearn how to interpret it, creating unnecessary friction.

Consistency should be built into the design system so that:

- Components are identical across channels.

- Language style remains the same, regardless of whether it’s delivered in a chatbot conversation or a billing statement.

- Iconography and colours for confidence levels, alerts, or recommendations match across platforms.

- Back-end logic ensures that the same data is being used to generate explanations in every channel.

4. Ethical Design: Embedding Fairness, Safety, and Accountability from the Start

Ethical design is not an afterthought; it’s a foundational principle. Telecom providers should design with the assumption that every AI decision may be scrutinised by both customers and regulators.

Best practices include:

- Bias testing during model development to prevent discriminatory recommendations (e.g., ensuring offers are not skewed by geography or demographics).

- Aligning with recognised frameworks like the NIST AI Risk Management Framework’s Generative AI Profile (NIST AI 600-1), which outlines transparency measures, human oversight requirements, and risk mitigation strategies.

- Ensuring human-in-the-loop review for high-stakes decisions, especially those involving service termination, large financial adjustments, or fraud prevention.

- Designing for mental model stability, leveraging patterns customers already understand, and introducing changes only when supported by strong evidence of improvement.

To sum up, here’s why these four pillars work together:

Explainability builds understanding.

Control empowers the customer.

Consistency reduces cognitive friction.

Ethical design ensures fairness and accountability.

When combined, they transform AI from a “black box” into a trusted collaborator — one that users are more likely to adopt, rely on, and even advocate for.

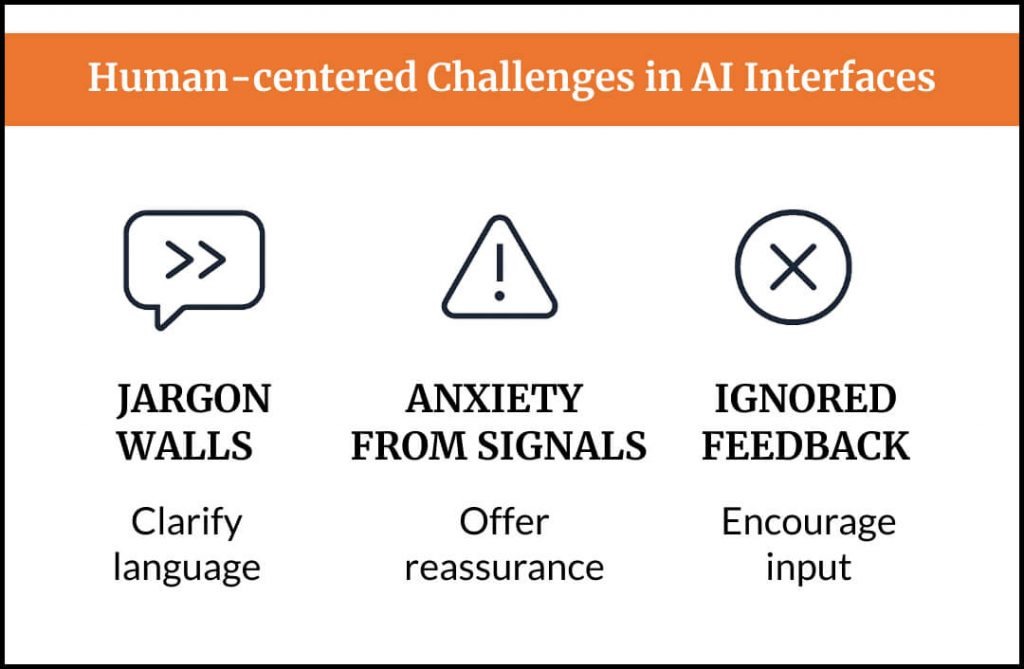

Making Human-Centric AI Interfaces

Transparency is not simply about showing the inner workings of algorithms but about making those algorithms relatable, approachable, and actionable for the people who interact with them. Even the most accurate AI will fail to gain adoption if customers feel alienated by the way its outputs are presented.

Designers can address this by focusing on solving three human-centred challenges:

1. Jargon Walls

AI explanations often use internal or technical terminology that means little to end users. Phrases like “predictive churn model” or “usage anomaly detection” may be meaningful to data scientists, but they create distance between the AI and the customer.

Recommended approach:

- Translate model outputs into plain, conversational language.

- Connect explanations to customer behaviour in familiar terms — e.g., “We think you might be unhappy with your service because you’ve called support three times in the past month.”

- Test wording with real users to ensure comprehension before rollout.

Example: Replace “Network quality degradation alert triggered by variance threshold breach” with “We’ve noticed your network speed has dropped below usual levels for the past 2 days.”

2. Anxiety from Signals

Confidence indicators (e.g., colour-coded badges, percentage scores) help users gauge reliability but can unintentionally create anxiety, especially when confidence is low. A “40% confidence” label without context may lead customers to mistrust the system entirely.

Recommended approach:

- Use neutral or supportive language around low confidence, guiding the user to safe next steps.

- Pair low confidence with a human-assist option — e.g., “We’re not certain. Would you like to review this with an agent?”

- Choose iconography and colours that communicate reassurance rather than danger for low-confidence states.

Example: If AI detects a possible billing error with low confidence, instead of showing a warning icon in red, show a neutral information icon with a message like, “We might have found a billing issue — let’s double-check together.”
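One way to keep low-confidence states calm and consistent is to map the model's confidence to a presentation in a single place. The sketch below assumes a confidence score between 0 and 1; the threshold, icon names, and the high-confidence message are hypothetical placeholders rather than recommended values.

```python
from dataclasses import dataclass

# Illustrative threshold; a real value should come from user research and testing.
LOW_CONFIDENCE_THRESHOLD = 0.6


@dataclass(frozen=True)
class Presentation:
    icon: str                     # hypothetical design-system icon name
    message: str
    highlight_human_review: bool  # whether to feature the agent hand-off prominently


def present_billing_finding(confidence: float) -> Presentation:
    """Choose icon, wording, and escalation emphasis from a confidence in [0, 1]."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < LOW_CONFIDENCE_THRESHOLD:
        # Neutral icon and collaborative wording instead of a red warning.
        return Presentation(
            icon="info-neutral",
            message="We might have found a billing issue. Let's double-check together.",
            highlight_human_review=True,
        )
    return Presentation(
        icon="info",
        message="We found a billing issue and prepared a correction for you to review.",
        highlight_human_review=False,
    )
```

Centralising this mapping also keeps the tone the same in the app, the web portal, and agent tools, because no channel decides on its own how to display uncertainty.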

3. Ignored Feedback

Feedback loops are often bolted on as an afterthought, leaving customers unsure if their input makes any difference. This leads to low participation and missed opportunities for model improvement.

Recommended approach:

- Make the feedback action quick and easy (one-tap confirm or contest).

- Show an immediate acknowledgement, then follow up when a change is made based on their input.

- Use this as a touchpoint to reinforce transparency, e.g., “Thanks for your feedback. We reviewed your case and adjusted the AI model to avoid similar errors.”

Example: After a user contests a roaming charge flagged by AI, send them an in-app notification when the charge is reversed, explicitly noting their feedback’s role in the decision.

Language shapes trust. Confidence cues shape reassurance. Feedback loops shape collaboration. When these elements work together, AI feels less like an opaque decision engine and more like a co-pilot that informs, collaborates, and learns alongside the customer.

Building Transparency for Enterprises and Employees Alike

AI trust is not solely a customer-facing concern. Employees, especially agents in contact centres, must also understand AI outputs to use them effectively. Without clarity, they may second-guess recommendations, leading to inconsistent service.

Internal AI dashboards should mirror the transparency principles used for customers. Agents should see the top factors influencing a recommendation, the system’s confidence level, and links to supporting data. Change logs that document updates to AI models can help employees adapt quickly when behaviour shifts.

Case studies reinforce this point. Verizon’s deployment of a Gemini-powered AI assistant to 28,000 sales and service reps in early 2025 cut handle times and boosted conversions. The critical factor? Providing explanations and source references directly in the agent workflow, allowing them to trust and act on AI suggestions without hesitation.

Case Insight: Explainable Virtual Assistants Reduce Escalations

One Tier-1 operator integrated explainable AI into its customer-facing virtual assistant. Instead of generic answers, the assistant provided concise reasons for its recommendations, displayed confidence levels, and offered a one-tap path to a human agent.

Within three months, adoption rates for self-service channels increased and escalations to live support decreased by double digits. This demonstrates a tangible ROI: transparency not only satisfies customers but also reduces operational strain.

Governance, Compliance, and the Design Advantage

AI in telecom is moving from an innovation conversation to a compliance conversation. Governments and regulators are no longer debating whether to set rules; they are finalising them.

The EU AI Act introduces staged obligations beginning in August 2025 for general-purpose AI, with full compliance for high-risk systems by August 2026. This means telecom operators deploying AI for customer decisioning, fraud detection, or service management will face strict transparency, documentation, and human oversight requirements.

In Canada, the federal Artificial Intelligence and Data Act (AIDA) has stalled for now, but PIPEDA and guidance from the Office of the Privacy Commissioner continue to apply, especially around data collection, consent, and usage transparency.

Global Influence

Even outside Europe, the EU AI Act is expected to set a de facto global standard, influencing how compliance frameworks are shaped in Canada, the U.S., and other jurisdictions.

For telecoms, this poses an operational and design challenge that will determine how quickly AI can scale without regulatory roadblocks.

Design as a Compliance Engine

Well-designed transparency features are not just good UX; they are compliance assets. The same elements that improve customer trust also produce the audit artefacts regulators demand.

Explanations provide documented reasoning that supports EU AI Act traceability requirements. Confidence indicators show how certain the model is, which helps assess risk. Logging features track inputs, outputs, and human overrides to maintain accountability.
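To make the logging idea concrete, here is a minimal sketch of a decision record that captures inputs, output, explanation, confidence, and any human override in one JSON line. The field names are assumptions for illustration; they are not taken from the EU AI Act or from any specific logging product, and a real schema should be agreed with compliance and legal teams.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    decision_id: str
    model_version: str
    inputs: dict          # categorised inputs, not raw personal data
    output: str
    explanation: str      # the customer-facing reason that was shown
    confidence: float
    overridden_by: Optional[str] = None    # agent or customer identifier, if any
    override_reason: Optional[str] = None
    recorded_at: str = ""                  # ISO 8601 timestamp; filled in if empty

    def to_json(self) -> str:
        """Serialise to one JSON line suitable for an append-only log."""
        record = asdict(self)
        record["recorded_at"] = self.recorded_at or datetime.now(timezone.utc).isoformat()
        return json.dumps(record, sort_keys=True)
```

Storing the explanation alongside the inputs and output means the same record serves the customer, the agent, and a later audit.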

Embedding these features early means compliance is not retrofitted at the last minute but baked into the product DNA. This reduces the cost and complexity of meeting regulatory standards while accelerating AI adoption.

Strategic Advantage Through Compliance-Ready Design

Telecom providers that align design with compliance now will:

- Avoid costly re-engineering when regulations take effect.

- Accelerate market trust by demonstrating readiness before competitors.

- Create scalable governance that applies to both customer-facing and internal AI systems.

Regulations may be an obligation, but compliance-aware design turns them into a competitive advantage.

Measuring AI Transparency and Trust

Building trust is not a one-off design project; it’s an ongoing performance discipline. Without measurement, teams cannot prove ROI, detect erosion in trust, or identify where interventions are needed.

1. Adoption & Experience Metrics

Measure how transparency patterns impact usage and satisfaction:

- Self-service completion rate: Higher completion indicates users trust automated flows.

- Reduction in escalations: Fewer handoffs to agents suggest improved AI credibility.

- Opt-out rates for AI personalisation: Declining opt-outs signal growing confidence in AI recommendations.

- Explanation helpfulness scores: Collected via quick in-flow rating mechanisms.

2. Fairness & Safety Metrics

Ensure AI is operating without bias or unintended harm:

- Disparate impact analysis: Monitor differences in recommendations, approvals, or alerts across demographics, geographies, and customer segments.

- False positive rates: Particularly in fraud detection or service disruption alerts — with targets for rapid resolution.
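Both fairness metrics reduce to simple ratios. The sketch below assumes outcomes are available as per-customer booleans, with `True` meaning a favourable outcome for the selection rate and a fraud flag for the false positive rate. How groups are defined, and what ratio counts as acceptable, are policy decisions that this code does not make.

```python
def selection_rate(outcomes: list[bool]) -> float:
    """Share of customers in a group who received the favourable outcome."""
    if not outcomes:
        raise ValueError("group has no outcomes")
    return sum(outcomes) / len(outcomes)


def disparate_impact_ratio(group: list[bool], reference: list[bool]) -> float:
    """Ratio of the group's selection rate to the reference group's rate."""
    reference_rate = selection_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has a zero selection rate")
    return selection_rate(group) / reference_rate


def false_positive_rate(flagged: list[bool], actually_fraud: list[bool]) -> float:
    """FP / (FP + TN): share of legitimate customers who were wrongly flagged."""
    if len(flagged) != len(actually_fraud):
        raise ValueError("inputs must have the same length")
    # Keep only the flags raised on customers who were in fact legitimate.
    legitimate_flags = [f for f, fraud in zip(flagged, actually_fraud) if not fraud]
    if not legitimate_flags:
        raise ValueError("no legitimate cases to evaluate")
    return sum(legitimate_flags) / len(legitimate_flags)
```

A ratio well below 1.0 for a given postal-code or demographic group is a signal to investigate, not proof of bias on its own; small groups in particular can produce noisy ratios.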

3. Governance & Quality Metrics

Track the health of your AI systems and the reliability of transparency mechanisms:

- Model change failure rate: Percentage of releases that introduce unexpected decision errors.

- Explanation payload coverage: Proportion of AI decisions with a stored, retrievable explanation record.

- Override rates: Frequency and context of human overrides, which can indicate where the AI still lacks user or agent trust.
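Explanation coverage and override rate can be computed directly from decision logs. This is a minimal sketch that assumes each logged decision carries an optional explanation identifier and an override flag; the `Decision` type and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    decision_id: str
    explanation_id: Optional[str]  # None when no explanation record was stored
    overridden: bool               # True when a human reversed the AI outcome


def explanation_coverage(decisions: list[Decision]) -> float:
    """Proportion of decisions that have a stored explanation record."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    covered = sum(d.explanation_id is not None for d in decisions)
    return covered / len(decisions)


def override_rate(decisions: list[Decision]) -> float:
    """Proportion of decisions that a human overrode."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    return sum(d.overridden for d in decisions) / len(decisions)
```

Breaking these rates down by decision type or channel usually reveals more than the overall figure, since overrides tend to cluster around specific flows.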

Continuous Improvement Loop

Metrics are only valuable if they drive change. Telecom providers should review these metrics quarterly at the product governance level, feed insights back into both model training and UX pattern refinement, and tie performance targets to cross-functional KPIs to ensure design, engineering, compliance, and operations share accountability for AI trust.

This approach transforms trust and transparency from vague values into quantifiable, actionable drivers of both compliance and competitive differentiation.

Final Takeaway: The Future of AI Transparency

Looking ahead, AI transparency will become even more critical as AI technologies evolve and integrate deeper into telecom and other industries. Future advancements will likely focus on creating AI systems that are not only transparent but also inherently trustworthy, embedding explainability and ethical considerations throughout the entire AI lifecycle.

Telecom providers and AI developers will need to adopt more sophisticated transparency tools, such as real-time transparency reporting, dynamic model interpretability, and advanced human oversight mechanisms, to keep pace with rapidly evolving AI models, including large language models and generative AI.

As regulatory frameworks like the EU AI Act mature and new global standards emerge, compliance will require continuous adaptation and proactive governance. Transparency efforts will extend beyond technical explanations to encompass ethical and societal dimensions, ensuring AI systems align with responsible AI development principles and respect data protection and privacy rights.

Moreover, the future of AI transparency will emphasise collaboration across the AI ecosystem, bringing together developers, regulators, users, and other stakeholders to foster knowledge sharing and build AI systems that deliver fair, accountable, and trustworthy outcomes. The challenge will be balancing transparency with protecting intellectual property and user privacy while maintaining AI performance and innovation.

Ultimately, AI transparency won’t just be a compliance checkbox or a customer-facing feature; it will be a foundational pillar of responsible AI deployment, driving public trust, enabling AI accountability, and shaping the future of human-centric AI experiences in telecom and beyond.